A new technology developed by Qi Pan and other researchers at the University of Cambridge lets users create 3D models on the fly by manipulating an object in front of a webcam. The user can watch the 3D model being reconstructed from the video in real time while moving and rotating the object. The program is called ProFORMA, and Pan says it will be publicly released soon.

The following video gives an excellent demonstration.

Previous work has allowed reconstruction of 3D models from photos or video; however, such work has been limited to offline processing: the algorithm takes a complete video and only then builds a 3D model, so the user cannot capture new perspectives until the whole model has been built. The clear advantage of real-time (online) processing is that the user can watch the model being built from the video as it is recorded, and can capture more footage of the object in different positions to correct problems in the 3D model as they arise.

Some examples of offline model reconstruction include Microsoft’s Photosynth, Stanford’s Make3D, and the University of Adelaide’s Video Trace.

How does it work?

The program uses a single camera and commodity hardware. The demonstration in the video was performed with a 2.4 GHz Intel dual-core processor and a Logitech QuickCam Pro 9000 (640 × 480 at 15 fps). The program assumes that you are modeling a single object; it cannot model multiple objects simultaneously.

The video camera must be kept stationary and only the object to be modeled is moved and rotated.

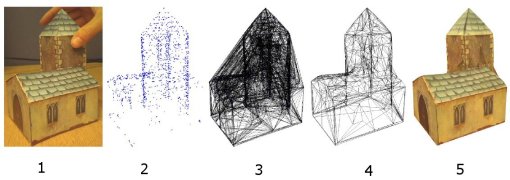

The program can be divided into 5 steps:

- Image capture

- Extraction of point cloud

- Delaunay tetrahedralization

- Tetrahedra carving

- Texturing the surface mesh

We will describe each step.

In image capture, the goal is to separate the object from the background and the user’s hand, and to collect enough data to form a point cloud representing the object in 3D space. This is done by sampling many small subwindows of the video (say, 5 × 5 pixels), which are called features. Because the camera is not moving, it is easy to determine which subwindows capture part of the background: background features stay still. Features falling on the user’s hand can also be identified, because the hand moves in and out of the frame and changes shape. The remaining features are taken to lie on the object, and each one is treated as a landmark on the object.
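The separation rule described above can be sketched as a per-feature classifier. This is a minimal illustration, not ProFORMA’s actual code: it assumes each feature has already been tracked into a list of (x, y) positions, and the thresholds `still_px` and `min_frames` are hypothetical values. Short-lived tracks stand in for features on the hand, which are lost as the hand moves in and out of view and changes shape.

```python
from math import hypot


def classify_track(positions, still_px=0.5, min_frames=10):
    """Label one tracked feature as 'background', 'object', or 'unreliable'.

    positions: list of (x, y) pixel coordinates of the feature, one per frame.
    still_px and min_frames are hypothetical thresholds, not ProFORMA's values.
    """
    # Features that are lost quickly (e.g. on a hand that moves in and out
    # of view and changes shape) are not trusted as landmarks.
    if len(positions) < min_frames:
        return "unreliable"
    # With a stationary camera, background features barely move at all.
    x0, y0 = positions[0]
    max_disp = max(hypot(x - x0, y - y0) for x, y in positions)
    if max_disp <= still_px:
        return "background"
    # Long-lived features that do move are kept as candidate object landmarks.
    return "object"


tracks = {
    "wall corner": [(100.0, 50.0)] * 30,
    "box logo": [(200.0 + 2 * i, 120.0 + i) for i in range(30)],
    "fingertip": [(300.0 + 5 * i, 200.0) for i in range(4)],
}
for name, pos in tracks.items():
    print(name, classify_track(pos))
```

A real system would also need to reject hand features that persist for a long time, for example by checking that a feature moves consistently with the rigid object; that refinement is left out here.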

Each landmark specifies a point on the object and taken together they form a point cloud that roughly approximates the shape of the object.
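The article does not say how each landmark’s 3D position is computed. Because only the object moves, in the object’s own coordinate frame it is as if the camera circled a fixed object, so a landmark seen in two frames can be located by intersecting the two viewing rays. The sketch below uses the textbook midpoint method and assumes the camera centers `c1`, `c2` and ray directions `d1`, `d2` (in the object frame) are already known; it is an illustration, not ProFORMA’s procedure.

```python
def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; no baseline to triangulate")
    # Parameters of the closest points on each ray.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    # With noisy rays p1 != p2; their midpoint is the landmark estimate.
    return tuple((x + y) / 2 for x, y in zip(p1, p2))


# Two views of a landmark at (1, 2, 5) from centers (0, 0, 0) and (2, 0, 0).
print(triangulate_midpoint((0, 0, 0), (1, 2, 5), (2, 0, 0), (-1, 2, 5)))
```

Repeating this for every landmark yields the point cloud; the parallel-ray check reflects that a landmark seen from (almost) the same direction twice gives no depth information.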

From the point cloud, a process called Delaunay tetrahedralization is run, which fills the convex hull of the points with tetrahedra. This essentially creates a rough cut of the model that is “too large”: it contains more material than the object actually has (concavities, for example, are filled in), as can be seen in step 3 of the diagram above.
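A Delaunay tetrahedralization is defined by the “empty circumsphere” property: no input point lies strictly inside the sphere passing through the four corners of any tetrahedron. The sketch below checks that property for a single tetrahedron; it does not construct the tetrahedralization, which in practice is done with a library such as `scipy.spatial.Delaunay` or CGAL. The helper names are illustrative.

```python
from math import dist


def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def circumsphere(a, b, c, d):
    """Center and radius of the sphere through the four vertices of a tetrahedron."""
    # |x - p|^2 = |x - a|^2 for p in (b, c, d) gives three linear equations
    # 2 (p - a) . x = |p|^2 - |a|^2, solved here with Cramer's rule.
    rows, rhs = [], []
    for p in (b, c, d):
        rows.append([2 * (pi - ai) for pi, ai in zip(p, a)])
        rhs.append(sum(pi * pi for pi in p) - sum(ai * ai for ai in a))
    D = det3(rows)
    if abs(D) < 1e-12:
        raise ValueError("degenerate (flat) tetrahedron")
    center = []
    for col in range(3):
        m = [row[:] for row in rows]
        for r in range(3):
            m[r][col] = rhs[r]
        center.append(det3(m) / D)
    center = tuple(center)
    return center, dist(center, a)


def satisfies_empty_sphere(tet, points, eps=1e-9):
    """True if no other input point lies strictly inside the tetrahedron's circumsphere."""
    center, radius = circumsphere(*tet)
    return all(dist(p, center) >= radius - eps for p in points if p not in tet)


tet = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
print(satisfies_empty_sphere(tet, tet + [(5, 5, 5)]))        # far point: property holds
print(satisfies_empty_sphere(tet, tet + [(0.5, 0.5, 0.5)]))  # point inside the sphere
```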

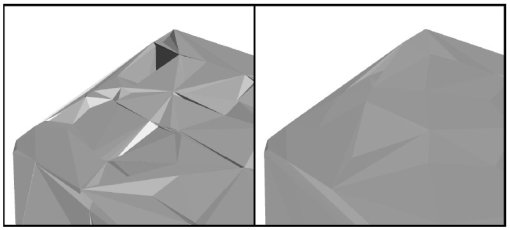

The model is so rough that some landmarks observed by the camera would be hidden if the real-life object actually had the model’s shape. So parts of the model are cut away until every landmark the camera saw is also visible on the 3D model. This is the tetrahedra carving stage. One of the program’s primary innovations is a new, more accurate tetrahedra carving algorithm. The picture below shows a model after carving with an older algorithm (left) and after carving with the researchers’ new algorithm (right). The new algorithm produces a smoother, more accurate model.
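The basic visibility rule behind carving can be sketched as follows: if the camera saw a landmark, the line of sight from the camera center to that landmark must pass through empty space, so any tetrahedron it crosses is removed. This is the classic rule only; it is not the researchers’ new algorithm, which the article does not detail. The sketch assumes camera centers are expressed in the object’s frame and clips each segment against the four face planes of a tetrahedron.

```python
def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def segment_crosses_tet(p0, p1, tet, eps=1e-9):
    """True if segment p0->p1 passes through the interior of tetrahedron tet."""
    d = sub(p1, p0)
    t_in, t_out = 0.0, 1.0
    # Each face (i, j, k) with its opposite vertex o defines one half-space.
    for i, j, k, o in ((0, 1, 2, 3), (0, 1, 3, 2), (0, 2, 3, 1), (1, 2, 3, 0)):
        vi = tet[i]
        n = cross(sub(tet[j], vi), sub(tet[k], vi))
        if dot(n, sub(tet[o], vi)) < 0:
            n = tuple(-x for x in n)  # make the normal point into the tetrahedron
        num = dot(n, sub(p0, vi))     # inside means num + t * den >= 0
        den = dot(n, d)
        if abs(den) < eps:
            if num < 0:
                return False          # parallel to the face and outside it
            continue
        t = -num / den
        if den > 0:
            t_in = max(t_in, t)
        else:
            t_out = min(t_out, t)
    # A positive-length overlap means the ray goes through the interior;
    # merely touching the landmark's own vertex gives zero length.
    return t_out - t_in > eps


def carve(tets, sight_lines):
    """Keep only tetrahedra that no (camera_center, landmark) sight line passes through."""
    return [tet for tet in tets
            if not any(segment_crosses_tet(c, x, tet) for c, x in sight_lines)]


tet = ((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1))
print(carve([tet], [((0.1, 0.1, -1), (0.1, 0.1, 2))]))  # sight line crosses it: carved
print(carve([tet], [((0, 0, -1), (0, 0, 0))]))          # only touches a vertex: kept
```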

In many cases, the resulting model closely approximates the real-life object. The model can then be textured with images captured by the camera to make it look lifelike.
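Texturing needs, for each mesh vertex, the pixel it maps to in a captured frame. A common way to get this is the pinhole camera model; the sketch below assumes the vertex is already expressed in the camera’s coordinate frame, and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values for a 640 × 480 webcam, not calibration data from the article.

```python
def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (X, Y, Z) to pixel (u, v)."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * X / Z + cx, fy * Y / Z + cy)


def texture_coords(point, fx, fy, cx, cy, width=640, height=480):
    """Pixel position normalized to [0, 1] texture coordinates (no v-flip applied)."""
    u, v = project(point, fx, fy, cx, cy)
    return (u / width, v / height)


# Hypothetical intrinsics for a 640 x 480 webcam.
K = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(project((1.0, 0.5, 2.0), **K))
print(texture_coords((0.0, 0.0, 2.0), **K))
```

Texture-coordinate conventions differ between tools (some flip the v axis), so the normalization step is a design choice to adapt to whatever renderer consumes the model.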

Note that all of these steps are performed in real-time, so that the user can actually view the 3D model as it is reconstructed by the program.

Results

The program was used to reconstruct 3D models of several objects as seen in the picture below.

Reconstruction of the church took 75 s and reconstruction of the box took 61 s. Most models took about a minute to build including time for video capture.

Limitations

In addition to the limitations noted above (the camera must be stationary, and only one object can be modeled at a time), there are a few other constraints. First, the program works best on highly textured objects. This is because it uses features to identify landmarks on the object, and in the absence of texture, all features tend to look alike. Second, the program generally assumes that the object will be in the center of the frame at the start of the video, though it can later be moved around freely.
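Why texture matters can be shown with a small matching experiment. The sketch below compares a 5 × 5 patch (the size used as an example earlier) against nearby patches using the sum of squared differences; if some other position matches as well as the true one, the feature is ambiguous. The matching method, search radius, and threshold are assumptions for illustration; the article does not specify ProFORMA’s feature matcher.

```python
def patch(img, r, c, half=2):
    """Flattened (2*half+1) x (2*half+1) patch centered at (r, c)."""
    return [img[i][j]
            for i in range(r - half, r + half + 1)
            for j in range(c - half, c + half + 1)]


def ssd(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def is_ambiguous(img, r, c, radius=3, half=2, thresh=1.0):
    """True if a shifted patch matches the patch at (r, c) almost perfectly."""
    ref = patch(img, r, c, half)
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if dr == 0 and dc == 0:
                continue
            if ssd(ref, patch(img, r + dr, c + dc, half)) < thresh:
                return True
    return False


flat = [[128] * 15 for _ in range(15)]           # untextured surface
dot = [[0] * 15 for _ in range(15)]
dot[7][7] = 255                                  # one distinctive mark
print(is_ambiguous(flat, 7, 7), is_ambiguous(dot, 7, 7))
```

On the flat image every neighboring patch is identical, so the feature cannot be located reliably from frame to frame; the single distinctive mark makes the match unique.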


What a crappy model this cheap software makes; it creates a model worse than if your grandmother made it holding the mouse with her butt…. what a joke….. says a real modeler…

The blog is good, I like it very much.

I bet things are not as easy as shown here..

Wonderful work…. bravo…

Greetings from Greece….

I gotta give it a try soon as it is out.

I have already something in mind to put it to the test.

I will go look at the Imperial College’s offering as well !

Super Awesome, guys and gals !!

The hand looks rendered; I think there is something fishy with this movie…

Takeo Kanade published his “A Sequential Factorization Method for Recovering Shape and Motion from Image Streams” that could recover 3D shape from a live video stream in 1994, but it doesn’t take perspective into account very well. I’ve been waiting for someone to take the next step with it.

I believe the Kanade factorization didn’t take perspective projection into account very well because the method assumes an affine camera. This assumption holds well enough only when the height (or depth, depending on perspective) of the objects in the image is << the distance from the camera to the objects, as in aerial images, for example. That is not the case here, and it looks like it works well anyway!
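The point about the affine assumption can be checked numerically. Under perspective projection a point at depth Z maps to x = fX/Z, while a weak-perspective (affine) camera uses one average depth Z_avg for every point: x = fX/Z_avg. The sketch below compares the two for a hypothetical object about 0.2 units deep, once held close to a webcam and once far away as in an aerial image; the focal length and sizes are illustrative assumptions.

```python
def max_affine_error(points, f):
    """Largest pixel gap between perspective and weak-perspective projection.

    points: list of (X, Z) pairs (horizontal offset, depth) in camera coordinates.
    """
    z_avg = sum(z for _, z in points) / len(points)
    return max(abs(f * x / z - f * x / z_avg) for x, z in points)


def object_at(z0, half_width=0.1, half_depth=0.1):
    """Corners of a small box centered at depth z0 (hypothetical object)."""
    return [(sx * half_width, z0 + sz * half_depth)
            for sx in (-1, 1) for sz in (-1, 1)]


f = 500.0  # hypothetical focal length in pixels
print(max_affine_error(object_at(0.5), f))    # object held near a webcam
print(max_affine_error(object_at(100.0), f))  # depth << distance, e.g. aerial
```

Near the camera the affine model is off by tens of pixels, while at a large distance the error is a small fraction of a pixel, which is why factorization with an affine camera works well for aerial imagery but not for objects held in front of a webcam.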

Awesome!

If I were to draw several perspectives of an object on paper and feed those drawings to the camera, would it be able to try and make a 3D model out of it (assuming I draw sufficient texture on it)?

I *think* the algorithm works by selecting some subset of invariant (can be recognized by an algorithm from many different perspectives) features on the image and matching these features between frames. Usually when they say “textured” they mean there are lots of good places to compute features; in practice these are lettering, symbols, strong geometry, etc. If you could manage to draw lots of these features in your pictures accurately depicted from different points of view, and could ensure that the algorithm actually chose them to consider, then maybe it could work. I think it may be difficult though.

We honestly need this tech to be public soon. This is a great idea for creating objects in games yourself, or maybe later even for computer graphics/movies.

How well does it work on objects with a cavity?

Source or didn’t happen!

But seriously, that’s awesome guys.

Anyone out there think this has the potential to put some gaming graphic artists out of business?

Only the ones that suck. It will never have the precision a good modeler does. Also, if it does not include the ability to extrapolate information such as bump/normals/specularity/opacity, it’s not very useful yet for high-end work. It certainly would be useful for background or midground models that don’t require high detail.

Imperial College have been doing something similar.

It’s less constrained, since it works based on camera motion, and was designed for 3D tracking (although the 3D data is all there and can certainly be used for modelling and mapping).

http://www.doc.ic.ac.uk/~ajd/

This is *very* cool … have you considered using two or more cameras at the same time to more accurately depict relative curvature? I’m sure using some sort of GPGPU library could dramatically speed up number crunching. Excellent work!

How well does it work on *faces*?

Impressive

Any chance of seeing this work with models bigger than the camera’s FOV? For example, modelling a canyon from inside the canyon?

Phenomenal! How does it discard the information about the hand or surrounding environment?

A guess would be that the hand is moving and non-rigid, so any feature points detected on the hand would not be matched with other feature points detected in frames without the hand. If they are matched, there is probably some kind of robust method used for computing the geometries which would ignore outliers.

The background may be disregarded by some rule saying if a feature point appears in the same location or has the same appearance in successive frames then it is not part of the model geometry. With little exception, the features on the rigid object should all change appearance if any of them exhibit a change.